Program Assessment Steps

Assessment is good educational practice because it demonstrates what you are already doing: improving your program through continual monitoring of student learning. Assessment is also part of the accreditation process, because the ability to demonstrate continued quality improvement and the use of assessment outcomes for planning and budgeting are critical to a healthy program.

NWCCU 2020 Standards for Accreditation

Standard 1.C - Student Learning

1.C.5 The institution engages in an effective system of assessment to evaluate the quality of learning in its programs. The institution recognizes the central role of faculty to establish curricula, assess student learning, and improve instructional programs.

1.C.7 The institution uses the results of its assessment efforts to inform academic and learning-support planning and practices to continuously improve student learning outcomes.

The assessment process requires the participation of all instructional faculty members. With a well-designed assessment process, not only is the time well spent, but the information gathered is also valuable and (even more important) useful to faculty. The purpose of this document is to provide an overview of the assessment process.

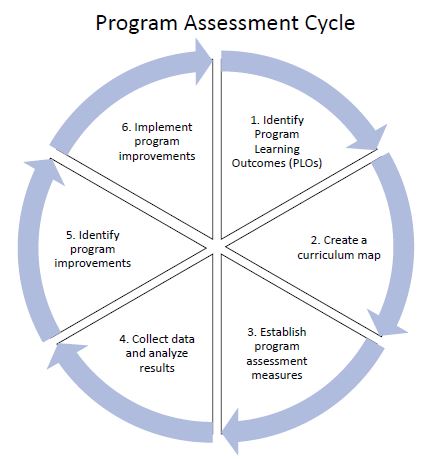

The basic assessment process for a degree program is diagrammed below:

The process is designed around continuous improvement and is based on an annual cycle (although not all outcomes need to be assessed every year). An ideal cycle parallels the program review, which is on a seven-year cycle for most programs. The information gathered yearly in program assessment should culminate in the information required for program review. Consequently, annual assessment should be seen as documentation that makes the process of program review more effective.

Learning outcomes can be written for a course, a program, or an institution. This document focuses specifically on learning outcomes for programs (e.g., degree programs). In many cases, these are easier to write than course learning outcomes, because they are typically less specific. However, well-designed outcomes should relate to each other.

Course Level – “Demonstrate an understanding of social psychology theories from a sociological perspective.”

Program Level – “Sociological Concepts. Our students will demonstrate a knowledge, comprehension, and relevance of core sociological concepts.”

Institutional Level (General Education) – “Develop a critical appreciation of the ways in which we gain and apply knowledge and understanding of the universe, of society, and of ourselves.”

We don’t typically map our learning outcomes all the way up to Institutional Level (although programs that include Core courses may benefit from this activity). However, mapping course outcomes into program outcomes is valuable in designing and evaluating outcomes. This is done in the Courseleaf CIM system and as a part of the annual program assessment reporting process.

Steps in the Assessment Process

At the program level, learning outcomes should be written as simple declarative statements. Overly complex or convoluted statements become very difficult to assess. Ideally, a program should have no more than five PLOs (although some programs have external accreditation requirements that set the PLOs). The PLOs should also be program specific; general outcomes are covered in CORE 2.0 Assessment.

Program Learning outcomes are the characteristics or skills that each student is expected to acquire by completion of their educational program.

By starting program learning outcomes with phrases such as “students completing our program will” you help ensure that the focus is on student learning and abilities defined by the program.

PLOs should focus on the expected capabilities of the students upon successful completion of the program (hence the “will” in the starter phrase), not on the actual performance determined at the end of the program. Because PLOs are much broader in scope than course outcomes, a common set of outcomes will include:

1. An outcome related to having the requisite knowledge for a program, or related function of a professional in the program.

2. An outcome related to critical thinking and higher-level cognitive skills as they pertain to use within the program.

3. An outcome related to the ability to communicate within the vernacular of the program.

4. An outcome related to ethical decision making.

While programs are certainly not required to use these outcomes, they are very common in some form. You will notice that all of these outcomes can be assessed even in lower-level classes, with the possible exception of #1.

If this set were used, the program outcomes might be written as (students completing our program will be able to):

1) Analyze problems in their field and develop solutions or strategies to solve those problems.

2) Communicate effectively and accurately within the vernacular of the program.

3) Apply the discipline’s code of ethics when making decisions.

4) Design an experiment and analyze data.

The goal is to keep the number of program outcomes workable. To do so, the outcomes have to be fairly general but still pertinent to the program. The specificity comes during the assessment process, because the components of each outcome can be assessed separately.

Create a matrix with Program Learning Outcomes along the X axis and courses along the Y axis. Identify the courses in which Program Learning Outcomes are addressed.

Program learning outcomes can be addressed in each course based upon level of complexity (introductory, developing, mastery). While courses typically build upon the previous content, it is appropriate to address them iteratively for review or reinforcement.

By assessing classes that demonstrate “Introductory”, “Developing” and “Mastery” levels, a program may better identify possible concerns in its curriculum pathways.
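The matrix described above can be sketched as a small data structure. The following is a minimal illustration only, assuming hypothetical course numbers and PLO labels (none of them come from an actual program) and using "I", "D", and "M" for the introductory, developing, and mastery levels. It prints the matrix and reports which levels of each outcome no course addresses.

```python
# Hypothetical curriculum map: course numbers and PLO labels are invented
# for illustration. Levels: "I" = introductory, "D" = developing, "M" = mastery.
CURRICULUM_MAP = {
    "SOCI 101": {"PLO1": "I", "PLO2": "I"},
    "SOCI 201": {"PLO1": "D", "PLO3": "I"},
    "SOCI 318": {"PLO2": "D", "PLO3": "D"},
    "SOCI 499": {"PLO1": "M", "PLO2": "M"},
}
PLOS = ["PLO1", "PLO2", "PLO3", "PLO4"]
LEVELS = ["I", "D", "M"]


def coverage_gaps(curriculum_map, plos, levels=LEVELS):
    """Return, for each PLO, the levels that no course addresses."""
    gaps = {}
    for plo in plos:
        covered = {row[plo] for row in curriculum_map.values() if plo in row}
        missing = [level for level in levels if level not in covered]
        if missing:
            gaps[plo] = missing
    return gaps


def print_matrix(curriculum_map, plos):
    """Print the map with PLOs as columns (X axis) and courses as rows (Y axis)."""
    print("Course".ljust(10) + "".join(p.ljust(6) for p in plos))
    for course, row in curriculum_map.items():
        print(course.ljust(10) + "".join(row.get(p, "-").ljust(6) for p in plos))


if __name__ == "__main__":
    print_matrix(CURRICULUM_MAP, PLOS)
    print(coverage_gaps(CURRICULUM_MAP, PLOS))
```

In this made-up map, the gap report would flag that PLO3 is never addressed at the mastery level and that PLO4 is not addressed in any course, which is the kind of curriculum pathway concern the matrix is meant to surface.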

Gather indications of student learning that will be used in the assessment process to determine whether, and to what extent, students are achieving the intended program outcomes. Examples of these indicators are samples of submitted papers, responses to specific questions on exams, or e-portfolios. Grades have limited use in program assessment because they often include measures that aren’t directly related to student learning (e.g., attendance) and can be too broad to assess a specific learning outcome.

Developing a rubric for faculty to evaluate these samples is a useful practice.

Scoring rubrics are used to identify desired characteristics in student examples, and assign numerical scores to the indicators. For example, common characteristics associated with written communications include organization, clarity, grammar, and punctuation. The grammar and punctuation category might have the following options:

a. Unacceptable (0) – Five or more grammar or punctuation errors per page.

b. Marginal (1) – Three or four grammar or punctuation errors per page.

c. Acceptable (2) – One or two grammar or punctuation errors per page.

d. Exceptional (3) – Very few grammar or punctuation errors in the report.

OR

a. Unacceptable (0) – Five or more grammar or punctuation errors per page.

b. Introductory (1) – Three or four grammar or punctuation errors per page.

c. Developing (2) – One or two grammar or punctuation errors per page.

d. Mastery (3) – Very few grammar or punctuation errors in the report.

When developing the scoring rubrics, it is important to consider the skill level that you expect your students to achieve in your program. Typically, students completing college programs should have attained a high level of performance in most of the program learning outcomes. That is, they should have a well-developed cognitive skill level in most areas.

To think about cognitive skill level, consider this abbreviated table of verbs from the (old) Bloom’s Taxonomy:

I: Introductory Level

- defines, describes, distinguishes, identifies, interprets, knows, summarizes, lists, recognizes

D: Developing Level

- applies, analyzes, computes, compares, demonstrates, contrasts, prepares, distinguishes, solves

M: Mastery Level

- categorizes, concludes, composes, critiques, creates, defends, devises, evaluates, designs, interprets, modifies, justifies

Using the example above, students in introductory-level freshman courses should be obtaining a level 1 to 2, whereas sophomore-level students should be at 2 to 3, and junior and senior classes should be at 3 to 4. You demonstrate that students have the appropriate level of skill by using these verbs in your scoring rubrics.

Each program learning outcome will need to be assigned a threshold. The design of the threshold will depend on the nature of the rubric.

The development of an effective threshold may take some adjustments, but should reflect the appropriate level of learning for all assessed courses.
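As one illustration of how a threshold might be checked, the sketch below assumes a threshold of the form "at least 80% of assessed students score 2 or higher on the rubric". That form, the default values, and the function name `meets_threshold` are assumptions made for this example; each program sets its own threshold based on its rubric.

```python
def meets_threshold(scores, min_score=2, min_fraction=0.8):
    """Check an assumed threshold: a minimum fraction of students at or above a score.

    scores       -- rubric scores, one per assessed student sample
    min_score    -- lowest rubric score counted as acceptable (assumed: 2)
    min_fraction -- fraction of students who must reach min_score (assumed: 0.8)

    Returns a (met, fraction) pair so the actual fraction can be reported.
    """
    if not scores:
        raise ValueError("no scores to evaluate")
    at_or_above = sum(1 for score in scores if score >= min_score)
    fraction = at_or_above / len(scores)
    return fraction >= min_fraction, fraction


if __name__ == "__main__":
    # Made-up rubric scores on the 0 to 3 scale used in the rubric example.
    met, fraction = meets_threshold([3, 2, 2, 1, 3, 2, 0, 3])
    print(f"Threshold met: {met} ({fraction:.0%} scored 2 or higher)")
```

Returning the fraction along with the yes/no result keeps the information faculty need when deciding whether a threshold should be adjusted.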

The indicators of student learning are typically related to:

• Examples of student work from courses

• Standardized questions embedded in examinations

• Results of pre- and post-testing

Remember: Summative results such as scores on exams or course grades are not used as assessment indicators – but results on a specific question or small set of questions may be used.

Using samples of student work is recommended to control the time required to score the data. In small classes, however, the whole class may be assessed. The population, or an unbiased sample of the collected assignments, is scored by at least two faculty members using scoring rubrics.

The faculty member who collects the samples of student work (from his or her course) should not also do the scoring. Other faculty members (or other qualified individuals) should score the student samples.
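The sampling and scoring guidance above can be sketched in a few lines. This is an illustration under stated assumptions rather than a prescribed procedure: the function names, the use of a simple random sample as the "unbiased sample", and averaging the scorers' results are all choices made for this example.

```python
import random


def draw_sample(submissions, sample_size, seed=None):
    """Return a simple random sample, or the whole class if it is small."""
    submissions = list(submissions)
    if len(submissions) <= sample_size:
        return submissions
    return random.Random(seed).sample(submissions, sample_size)


def assign_scorers(faculty, collector, n_scorers=2, seed=None):
    """Pick scorers from the faculty, excluding whoever collected the work."""
    eligible = [person for person in faculty if person != collector]
    if len(eligible) < n_scorers:
        raise ValueError("not enough eligible scorers")
    return random.Random(seed).sample(eligible, n_scorers)


def average_scores(scores_by_scorer):
    """Average each sample's rubric score across scorers (an assumed way to combine them)."""
    per_sample = zip(*scores_by_scorer.values())
    return [sum(scores) / len(scores) for scores in per_sample]


if __name__ == "__main__":
    # Hypothetical names and data for illustration only.
    papers = [f"paper_{i}" for i in range(30)]
    sample = draw_sample(papers, sample_size=10, seed=1)
    scorers = assign_scorers(["Avery", "Blake", "Casey", "Drew"], collector="Avery", seed=1)
    print(sample)
    print(scorers)
    print(average_scores({"Blake": [2, 3, 1], "Casey": [3, 3, 2]}))
```

Fixing the `seed` makes the selection reproducible, which can help when documenting how a sample was drawn.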

Establish a schedule for small and whole group faculty data analysis and program improvement meetings. An important part of the assessment plan is to have an opportunity for faculty to discuss and evaluate where changes might need to be made based on the results of the assessment. These conversations and resulting adjustments probably already occur regularly, but the process of program assessment makes those efforts more intentional and allows those changes to be documented.

Scores are presented at a program/unit faculty meeting for assessment. Faculty will then review the assessment results, and respond accordingly. For example:

- If an acceptable performance threshold has not been met, possible responses:

  - Gather additional data to verify or refute the result.

  - Change something in the curriculum to try to address the problem.

  - Change the acceptable performance threshold and reassess.

  - Choose a different assignment to assess the outcome.

- If an acceptable performance threshold has been met, possible responses:

  - Faculty may reconsider thresholds.

  - Evaluate the rubric to ensure outcomes meet student skill level (example – classes with differing learning outcomes based on student level).

  - Use Bloom’s Taxonomy to evaluate learning outcomes.

Implement the program revisions discussed and decided on. Examples include: course sequence revisions; addition or removal of courses; field experience restructure; revision of assessments/rubric adjustments; moving course LOs among courses; eliminating duplication of course content; and program/course learning outcome adjustment.

In the next assessment cycle, demonstrate the impact of the assessment response. These reports are ongoing: they will reflect what has been done and what will be done, ultimately showing progressive and continued improvement.

These reports will be submitted annually to the Program Assessment and Outcomes Committee, which will review the faculty’s assessments and responses. The Assessment and Outcomes Committee leads and facilitates authentic assessment for all undergraduate and graduate degree programs. The committee reviews Annual Program Assessments, which provide the foundation upon which Montana State University develops, identifies, and documents quality improvement plans and goals, including the institutional reporting associated with the strategic planning objectives.